Seongmin Lee

+1 (310) 806-7239

University of California, Los Angeles

Computer Science Department

Engineering VI, Room 486

Los Angeles, CA 90095

I am a postdoctoral researcher at the Software Evolution and Analysis Laboratory (SEAL) at UCLA, working with Prof. Miryung Kim.

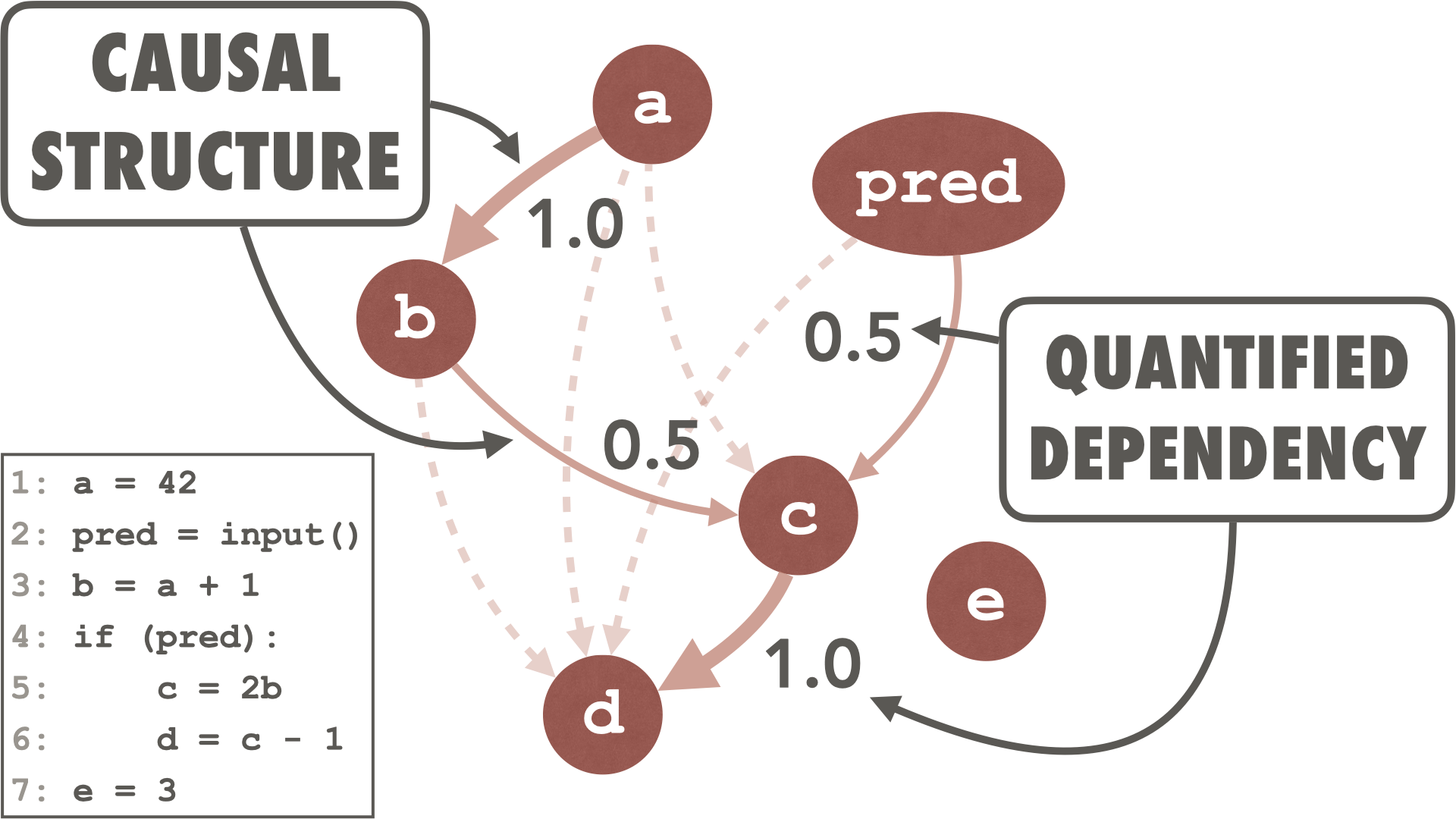

My research interests lie in program analysis and software testing, with a focus on making program analysis scale to the size and complexity of modern software systems. I aim to make software testing practical in real-world scenarios by applying statistical methods to dynamic information from program execution, which supports reasoning about a program’s semantic properties and helps address empirical challenges in software testing.

Prior to joining SEAL, I was a postdoctoral researcher at the Max Planck Institute for Security and Privacy (MPI-SP) in Bochum, Germany, where I worked with Dr. Marcel Böhme in the Software Security group. I received my Ph.D. in 2022 from KAIST, where I was a member of the Computational Intelligence for Software Engineering Lab (COINSE), advised by Dr. Shin Yoo.

news

| Dec, 2025 | |
|---|---|
| Oct, 2025 | |
| Sep, 2025 | |
| Jul, 2025 | |
| Jun, 2025 | |

selected publications

- FSE. In Bugs We Trust? On Measuring the Randomness of a Fuzzer Benchmarking Outcome. In Proceedings of the ACM International Conference on the Foundations of Software Engineering, Jul 2026